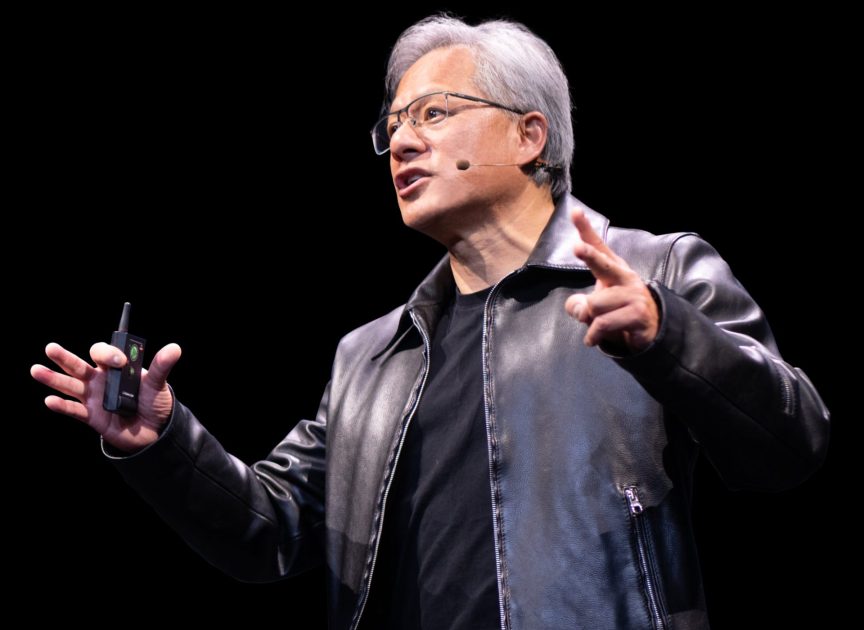

At Nvidia’s developer conference in San Jose on Monday, CEO Jensen Huang unveiled the company’s next generation of AI chips and software, promising major gains in performance and capability.

The new generation of AI graphics processors is named Blackwell. The first chip in the lineup, the GB200, is scheduled to ship later this year.

According to Huang, the GB200, which pairs two B200 graphics processors with an Arm-based central processor, delivers 20 petaflops of AI performance. That is five times the 4 petaflops of the previous-generation Hopper H100.

If Huang’s figures hold up, it’s a game-changer for AI computing.

“Hopper is fantastic, but we need bigger GPUs,” Huang told the audience.

As AI advances rapidly, models are growing larger and more complex. The growth of cloud computing and big data analytics has created strong demand for powerful hardware, and Nvidia’s Blackwell chips aim to meet it by letting AI companies train larger and more complex models.

The company also introduced NIM (Nvidia Inference Microservice), software designed to simplify the deployment of AI models. NIM makes it easier to run AI software on Nvidia GPUs, including older GPU models.

Nvidia executives say the company is shifting from being solely a chip manufacturer to being a full platform, like Microsoft or Apple, on which other companies can build and deploy AI software.

“Blackwell’s not a chip, it’s the name of a platform,” Huang said.

“The sellable commercial product was the GPU and the software was all to help people use the GPU in different ways,” said Nvidia enterprise VP Manuvir Das in an interview. “Of course, we still do that. But what’s really changed is, we really have a commercial software business now.”

Das said Nvidia’s new software will make it easier to run programs on any Nvidia GPU, including older ones that may be better suited to deploying AI than to building it.

“In my code, where I was calling into OpenAI, I will replace one line of code to point it to this NIM that I got from Nvidia instead,” Das added.
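Das’s “one line of code” describes swapping an OpenAI endpoint for a NIM. The sketch below illustrates the idea under stated assumptions: it supposes that a NIM is running at `http://localhost:8000/v1` and accepts OpenAI-style chat-completion requests. That address, the model name `example-model`, and the helper functions are hypothetical placeholders for illustration, not documented Nvidia values. It uses only Python’s standard library and builds the request without sending it, so the only thing that differs between providers is the base URL.

```python
import json
import urllib.request

# OpenAI's public API base URL (chat completions live under /chat/completions).
OPENAI_BASE_URL = "https://api.openai.com/v1"
# Hypothetical address of a NIM running on the local machine (assumption).
NIM_BASE_URL = "http://localhost:8000/v1"

# The "one line" Das describes: point the client at NIM instead of OpenAI.
BASE_URL = NIM_BASE_URL


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "") -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat-completion request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        # OpenAI-style APIs authenticate with a bearer token.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=body,
        headers=headers,
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply (needs a live server)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, "example-model", "Hello!")
    print(req.get_method(), req.full_url)
```

The request body is identical for both endpoints; only `BASE_URL` changes. `send_chat_request` would be called only once a real endpoint is reachable.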

At the conference, Nvidia also introduced a system capable of handling a 27-trillion-parameter model, far larger than even the biggest existing models, such as GPT-4, which reportedly has about 1.76 trillion parameters. The company didn’t disclose the system’s cost, but analysts estimate that similar systems range from $25,000 to $200,000.

According to Nvidia officials, the goal is to get customers to subscribe to Nvidia AI Enterprise, a license that costs $4,500 per GPU per year. Nvidia also works with AI companies such as Microsoft and Hugging Face to make sure their models run smoothly on Nvidia chips.
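At the quoted price of $4,500 per GPU per year, license costs scale directly with the number of GPUs. The minimal sketch below works through that arithmetic. It assumes flat per-GPU pricing with no volume discounts, which the announcement does not address. The helper name `license_cost` and the GPU counts in the example are hypothetical, chosen only for illustration.

```python
# Price quoted by Nvidia officials, in US dollars per GPU per year.
LICENSE_USD_PER_GPU_YEAR = 4_500


def license_cost(num_gpus: int, years: int = 1) -> int:
    """Total license cost in USD, assuming flat per-GPU pricing (no discounts)."""
    if num_gpus < 0 or years < 0:
        raise ValueError("num_gpus and years must be non-negative")
    return num_gpus * years * LICENSE_USD_PER_GPU_YEAR


if __name__ == "__main__":
    # Hypothetical fleet sizes, for illustration only.
    for gpus in (1, 8, 72):
        print(f"{gpus:>3} GPUs: ${license_cost(gpus):,} per year")
```

For example, a hypothetical 8-GPU server would cost $36,000 a year to license under this assumption.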

“It’s all made at home,” Huang said as he introduced new simulations from the Omniverse platform, stressing that the clips were generated rather than animated.

According to Nvidia, NIM lets developers deploy AI models on their own servers or on Nvidia’s cloud with little hassle.

The icing on the cake, of course, was a robotics demonstration showing how Omniverse can power robots. It featured two cute mini robots, one green and one orange, which didn’t seem to be obeying the CEO very well.
